Machine Learning

From translation apps to autonomous vehicles, all powers with Machine Learning. It offers a way to solve problems and answer complex questions. It is basically a process of training a piece of software called an algorithm or model, to make useful predictions from data. This article discusses the categories of machine learning problems, and terminologies used in the field of machine learning.

Types of machine learning problems

There are various ways to classify machine learning problems. Here, we discuss the most obvious ones.

1. On basis of the nature of the learning “signal” or “feedback” available to a learning system

- Supervised learning: The model or algorithm is presented with example inputs and their desired outputs and then finds patterns and connections between the input and the output. The goal is to learn a general rule that maps inputs to outputs. The training process continues until the model achieves the desired level of accuracy on the training data. Some real-life examples are:

- Image Classification: You train with images/labels. Then in the future, you give a new image expecting that the computer will recognize the new object.

- Market Prediction/Regression: You train the computer with historical market data and ask the computer to predict the new price in the future.

- Unsupervised learning: No labels are given to the learning algorithm, leaving it on its own to find structure in its input. It is used for clustering populations in different groups. Unsupervised learning can be a goal in itself (discovering hidden patterns in data).

- Clustering: You ask the computer to separate similar data into clusters, this is essential in research and science.

- High-Dimension Visualization: Use the computer to help us visualize high-dimension data.

- Generative Models: After a model captures the probability distribution of your input data, it will be able to generate more data. This can be very useful to make your classifier more robust.

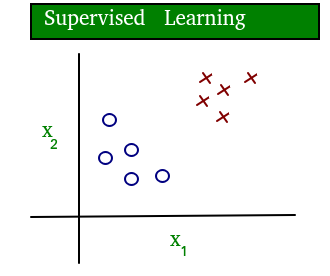

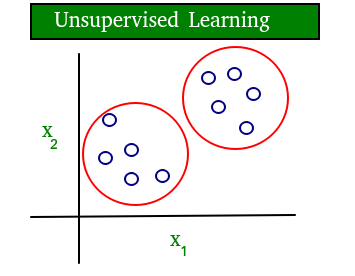

A simple diagram that clears the concept of supervised and unsupervised learning is shown below:

As you can see clearly, the data in supervised learning is labeled, whereas data in unsupervised learning is unlabelled.

- Semi-supervised learning: Problems where you have a large amount of input data and only some of the data is labeled, are called semi-supervised learning problems. These problems sit in between both supervised and unsupervised learning. For example, a photo archive where only some of the images are labeled, (e.g. dog, cat, person) and the majority are unlabeled.

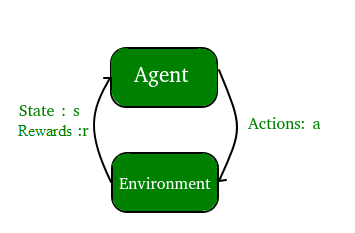

- Reinforcement learning: A computer program interacts with a dynamic environment in which it must perform a certain goal (such as driving a vehicle or playing a game against an opponent). The program is provided feedback in terms of rewards and punishments as it navigates its problem space.

2. Two most common use cases of Supervised learning are:

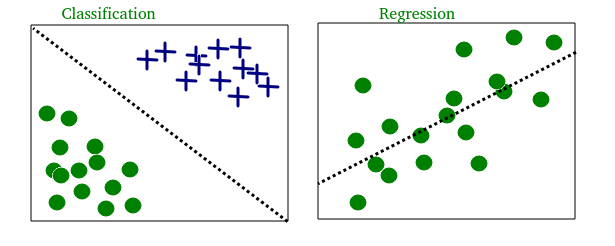

- Classification: Inputs are divided into two or more classes, and the learner must produce a model that assigns unseen inputs to one or more (multi-label classification) of these classes and predicts whether or not something belongs to a particular class. This is typically tackled in a supervised way. Classification models can be categorized in two groups: Binary classification and Multiclass Classification. Spam filtering is an example of binary classification, where the inputs are email (or other) messages and the classes are “spam” and “not spam”.

- Regression: It is also a supervised learning problem, that predicts a numeric value and outputs are continuous rather than discrete. For example, predicting stock prices using historical data.

An example of classification and regression on two different datasets is shown below:

3. Most common Unsupervised learning are:

- Clustering: Here, a set of inputs is to be divided into groups. Unlike in classification, the groups are not known beforehand, making this typically an unsupervised task. As you can see in the example below, the given dataset points have been divided into groups identifiable by the colors red, green, and blue.

- Density estimation: The task is to find the distribution of inputs in some space.

- Dimensionality reduction: It simplifies inputs by mapping them into a lower-dimensional space. Topic modeling is a related problem, where a program is given a list of human language documents and is tasked to find out which documents cover similar topics.

On the basis of these machine learning tasks/problems, we have a number of algorithms that are used to accomplish these tasks. Some commonly used machine learning algorithms are Linear Regression, Logistic Regression, Decision Tree, SVM(Support vector machines), Naive Bayes, KNN(K nearest neighbors), K-Means, Random Forest, etc. Note: All these algorithms will be covered in upcoming articles.

Terminologies of Machine Learning

- Model A model is a specific representation learned from data by applying some machine learning algorithm. A model is also called a hypothesis.

- Feature A feature is an individual measurable property of our data. A set of numeric features can be conveniently described by a feature vector. Feature vectors are fed as input to the model. For example, in order to predict a fruit, there may be features like color, smell, taste, etc. Note: Choosing informative, discriminating and independent features is a crucial step for effective algorithms. We generally employ a feature extractor to extract the relevant features from the raw data.

- Target (Label) A target variable or label is the value to be predicted by our model. For the fruit example discussed in the features section, the label with each set of input would be the name of the fruit like apple, orange, banana, etc.

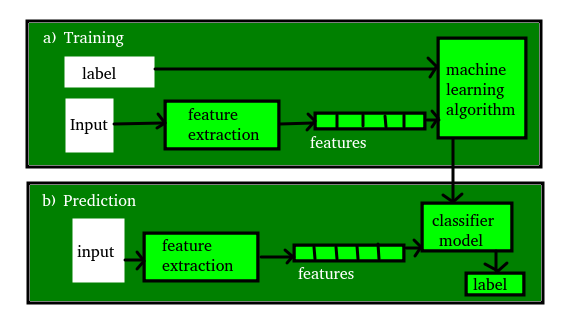

- Training The idea is to give a set of inputs(features) and its expected outputs(labels), so after training, we will have a model (hypothesis) that will then map new data to one of the categories trained on.

- Prediction Once our model is ready, it can be fed a set of inputs to which it will provide a predicted output(label). But make sure if the machine performs well on unseen data, then only we can say the machine performs well.

The figure shown below clears the above concepts:

Here are the steps to get started with machine learning:

- Define the Problem: Identify the problem you want to solve and determine if machine learning can be used to solve it.

- Collect Data: Gather and clean the data that you will use to train your model. The quality of your model will depend on the quality of your data.

- Explore the Data: Use data visualization and statistical methods to understand the structure and relationships within your data.

- Pre-process the Data: Prepare the data for modeling by normalizing, transforming, and cleaning it as necessary.

- Split the Data: Divide the data into training and test datasets to validate your model.

- Choose a Model: Select a machine learning model that is appropriate for your problem and the data you have collected.

- Train the Model: Use the training data to train the model, adjusting its parameters to fit the data as accurately as possible.

- Evaluate the Model: Use the test data to evaluate the performance of the model and determine its accuracy.

- Fine-tune the Model: Based on the results of the evaluation, fine-tune the model by adjusting its parameters and repeating the training process until the desired level of accuracy is achieved.

- Deploy the Model: Integrate the model into your application or system, making it available for use by others.

- Monitor the Model: Continuously monitor the performance of the model to ensure that it continues to provide accurate results over time.

Example :

Here is a simple machine-learning example in Python that demonstrates how to train a model to predict the species of iris flowers based on their sepal and petal measurements:

- Python

output:

Test accuracy: 0.9666666666666667

Related Articles:

- Demystifying Machine Learning

- Machine Learning Applications

References:

- https://en.wikipedia.org/wiki/Machine_learning

- https://leonardoaraujosantos.gitbooks.io/artificial-intelligence/

- http://machinelearningmastery.com/data-terminology-in-machine-learning/

Introduction :

- Getting Started with Machine Learning

- An Introduction to Machine Learning

- What is Machine Learning ?

- Introduction to Data in Machine Learning

- Demystifying Machine Learning

- ML – Applications

- Best Python libraries for Machine Learning

- Artificial Intelligence | An Introduction

- Machine Learning and Artificial Intelligence

- Difference between Machine learning and Artificial Intelligence

- Agents in Artificial Intelligence

- 10 Basic Machine Learning Interview Questions

Data and It’s Processing:

- Introduction to Data in Machine Learning

- Understanding Data Processing

- Python | Create Test DataSets using Sklearn

- Python | Generate test datasets for Machine learning

- Python | Data Preprocessing in Python

- Data Cleaning

- Feature Scaling – Part 1

- Feature Scaling – Part 2

- Python | Label Encoding of datasets

- Python | One Hot Encoding of datasets

- Handling Imbalanced Data with SMOTE and Near Miss Algorithm in Python

- Dummy variable trap in Regression Models

Supervised learning :

- Getting started with Classification

- Basic Concept of Classification

- Types of Regression Techniques

- Classification vs Regression

- ML | Types of Learning – Supervised Learning

- Multiclass classification using scikit-learn

- Linear Regression :

- Introduction to Linear Regression

- Gradient Descent in Linear Regression

- Mathematical explanation for Linear Regression working

- Normal Equation in Linear Regression

- Linear Regression (Python Implementation)

- Simple Linear-Regression using R

- Univariate Linear Regression in Python

- Multiple Linear Regression using Python

- Multiple Linear Regression using R

- Locally weighted Linear Regression

- Generalized Linear Models

- Python | Linear Regression using sklearn

- Linear Regression Using Tensorflow

- A Practical approach to Simple Linear Regression using R

- Linear Regression using PyTorch

- Pyspark | Linear regression using Apache MLlib

- ML | Boston Housing Kaggle Challenge with Linear Regression

- Python | Implementation of Polynomial Regression

- Softmax Regression using TensorFlow

- Naive Bayes Classifiers

Unsupervised learning :

- ML | Types of Learning – Unsupervised Learning

- Supervised and Unsupervised learning

- Clustering in Machine Learning

- Different Types of Clustering Algorithm

- K means Clustering – Introduction

- Elbow Method for optimal value of k in KMeans

- Random Initialization Trap in K-Means

- ML | K-means++ Algorithm

- Analysis of test data using K-Means Clustering in Python

- Mini Batch K-means clustering algorithm

- Mean-Shift Clustering

- DBSCAN – Density based clustering

- Implementing DBSCAN algorithm using Sklearn

- Fuzzy Clustering

- Spectral Clustering

- OPTICS Clustering

- OPTICS Clustering Implementing using Sklearn

- Hierarchical clustering (Agglomerative and Divisive clustering)

- Implementing Agglomerative Clustering using Sklearn

- Gaussian Mixture Model

Reinforcement Learning:

Dimensionality Reduction :

- Introduction to Dimensionality Reduction

- Introduction to Kernel PCA

- Principal Component Analysis(PCA)

- Principal Component Analysis with Python

- Low-Rank Approximations

- Overview of Linear Discriminant Analysis (LDA)

- Mathematical Explanation of Linear Discriminant Analysis (LDA)

- Generalized Discriminant Analysis (GDA)

- Independent Component Analysis

- Feature Mapping

- Extra Tree Classifier for Feature Selection

- Chi-Square Test for Feature Selection – Mathematical Explanation

- ML | T-distributed Stochastic Neighbor Embedding (t-SNE) Algorithm

- Python | How and where to apply Feature Scaling?

- Parameters for Feature Selection

- Underfitting and Overfitting in Machine Learning

Natural Language Processing :

- Introduction to Natural Language Processing

- Text Preprocessing in Python | Set – 1

- Text Preprocessing in Python | Set 2

- Removing stop words with NLTK in Python

- Tokenize text using NLTK in python

- How tokenizing text, sentence, words works

- Introduction to Stemming

- Stemming words with NLTK

- Lemmatization with NLTK

- Lemmatization with TextBlob

- How to get synonyms/antonyms from NLTK WordNet in Python?

Neural Networks :

- Introduction to Artificial Neutral Networks | Set 1

- Introduction to Artificial Neural Network | Set 2

- Introduction to ANN (Artificial Neural Networks) | Set 3 (Hybrid Systems)

- Introduction to ANN | Set 4 (Network Architectures)

- Activation functions

- Implementing Artificial Neural Network training process in Python

- A single neuron neural network in Python

- Introduction to Deep Q-Learning

- Implementing Deep Q-Learning using Tensorflow

ML – Deployment :

- Deploy your Machine Learning web app (Streamlit) on Heroku

- Deploy a Machine Learning Model using Streamlit Library

- Deploy Machine Learning Model using Flask

- Python – Create UIs for prototyping Machine Learning model with Gradio

- How to Prepare Data Before Deploying a Machine Learning Model?

- Deploying ML Models as API using FastAPI

- Deploying Scrapy spider on ScrapingHub

ML – Applications :

- Rainfall prediction using Linear regression

- Identifying handwritten digits using Logistic Regression in PyTorch

- Kaggle Breast Cancer Wisconsin Diagnosis using Logistic Regression

- Python | Implementation of Movie Recommender System

- Support Vector Machine to recognize facial features in C++

- Decision Trees – Fake (Counterfeit) Coin Puzzle (12 Coin Puzzle)

- Credit Card Fraud Detection

- NLP analysis of Restaurant reviews

- Applying Multinomial Naive Bayes to NLP Problems

- Image compression using K-means clustering

- Deep learning | Image Caption Generation using the Avengers EndGames Characters

- How Does Google Use Machine Learning?

- How Does NASA Use Machine Learning?

- 5 Mind-Blowing Ways Facebook Uses Machine Learning

- Targeted Advertising using Machine Learning

- How Machine Learning Is Used by Famous Companies?

Misc :

- Pattern Recognition | Introduction

- Calculate Efficiency Of Binary Classifier

- Logistic Regression v/s Decision Tree Classification

- R vs Python in Datascience

- Explanation of Fundamental Functions involved in A3C algorithm

- Differential Privacy and Deep Learning

- Artificial intelligence vs Machine Learning vs Deep Learning

- Introduction to Multi-Task Learning(MTL) for Deep Learning

- Top 10 Algorithms every Machine Learning Engineer should know

- Azure Virtual Machine for Machine Learning

- 30 minutes to machine learning

- What is AutoML in Machine Learning?

- Confusion Matrix in Machine Learning

Prerequisites to learn machine learning

- Knowledge of Linear equations, graphs of functions, statistics, Linear Algebra, Probability, Calculus etc.

- Any programming language knowledge like Python, C++, R are recommended.

.png)

Thank you for giving a good material

ReplyDelete